Training and integrating a custom text classifier into a spaCy pipeline

Customize spaCy pipelines with your own components

Introduction

spaCy is a Natural Language Processing (NLP) library and framework for productionizing Machine Learning and NLP applications.

There are loads of resources on training a spaCy component such as NER, text classification or other basic NLP components, but I couldn't find one that went all the way, i.e. one where you end up with a full-fledged pretrained pipeline covering common components such as dependency parsing, POS tagging or NER, plus a custom component that predicts a specific task.

This is what this notebook / post sets out to do: guide you through the whole process of configuring a text classification component, training it from Python, and integrating it into a fully featured pretrained pipeline that can be reused anywhere else.

```
%pip install -q "spacy>3.0.0" pandas scikit-learn
```

We'll also need to download a pretrained model from spaCy's English models: https://spacy.io/models/en#en_core_web_md. The following command needs to be executed in the same environment as your notebook kernel.

```
!python -m spacy download en_core_web_md
```

The data & the classification task

We'll work with a dataset extracted through the Reddit API. I've prepared it so that it can be easily imported and converted into spaCy docs.

You can find the dataset here.

The dataset is a simple extract of post bodies from a selection of data-science-related subreddits. The objective of our task is to guess, from the text, which subreddit a post comes from. Even though the task itself is of limited interest, it is a good use case to start with, and labelled data for it is easy to obtain.

Let's load the dataset to inspect what is inside.

```python
import pandas as pd

pd.options.display.max_colwidth = None
pd.options.display.max_rows = 3

data = pd.read_csv("spacy_textcat/reddit_data.csv")
data
```

```python
cats = data.subreddit.unique().tolist()
cats
```

The dataset is composed of ~700 cleaned texts, each along with the subreddit it was extracted from. Let's now create the spaCy training / validation data by annotating spaCy docs created from the texts.

```python
from typing import List, Tuple

import spacy
from spacy.tokens import DocBin

# Load the spaCy pretrained model that we downloaded before
nlp = spacy.load("en_core_web_md")


# Create a spaCy dataset (a DocBin) from (text, label) pairs and save it to disk
def make_docs(data: List[Tuple[str, str]], target_file: str, cats: List[str]) -> DocBin:
    docs = DocBin()
    # Use nlp.pipe to efficiently process a large number of text inputs;
    # as_tuples=True lets us pass (text, context) tuples and get the
    # context back in the loop, here the label
    for doc, label in nlp.pipe(data, as_tuples=True):
        # One-hot encode the label: 1 for the post's subreddit, 0 for the others
        for cat in cats:
            doc.cats[cat] = 1 if cat == label else 0
        docs.add(doc)
    docs.to_disk(target_file)
    return docs
```
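To see what make_docs stores without loading the full en_core_web_md pipeline, here is a minimal sketch that uses a blank English pipeline (tokenizer only, nothing to download) and a couple of hypothetical texts and labels. It builds the same one-hot doc.cats, serializes the DocBin to bytes (to_disk essentially writes these bytes to a file) and reads the docs back with get_docs, showing that the categories survive the round trip.

```python
import spacy
from spacy.tokens import DocBin

# Hypothetical labels and texts, for illustration only
cats = ["datascience", "datasets"]
examples = [
    ("Looking for a dataset about bird species in New Zealand", "datasets"),
    ("How did you land your first data science job?", "datascience"),
]

# A blank English pipeline only contains the tokenizer
nlp_blank = spacy.blank("en")

doc_bin = DocBin()
for doc, label in nlp_blank.pipe(examples, as_tuples=True):
    # One-hot encoding: exactly one category is 1.0, all the others are 0.0
    doc.cats = {cat: 1.0 if cat == label else 0.0 for cat in cats}
    doc_bin.add(doc)

# Serialize the DocBin, then restore the docs with the same vocab
restored = DocBin().from_bytes(doc_bin.to_bytes())
restored_docs = list(restored.get_docs(nlp_blank.vocab))

for doc in restored_docs:
    print(doc.cats)
```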

Let's now split the dataset into training and validation sets, and store them for training.

```python
from sklearn.model_selection import train_test_split

X_train, X_valid, y_train, y_valid = train_test_split(
    data["text"].values, data["subreddit"].values, test_size=0.3
)

make_docs(list(zip(X_train, y_train)), "train.spacy", cats=cats)
make_docs(list(zip(X_valid, y_valid)), "valid.spacy", cats=cats)
```
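The split above is neither stratified nor seeded, so the subreddit proportions can differ between the two files and the split changes on every run; scikit-learn's train_test_split accepts stratify= and random_state= for that. To show what a stratified split does, here is a stdlib-only sketch with a hypothetical stratified_split helper (not part of the original notebook): it shuffles and splits each label's texts separately, so every subreddit keeps roughly the same share in both sets.

```python
import random
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple

Pair = Tuple[str, str]


def stratified_split(
    texts: Sequence[str], labels: Sequence[str], test_size: float = 0.3, seed: int = 42
) -> Tuple[List[Pair], List[Pair]]:
    """Split (text, label) pairs so each label keeps the same proportion in both sets."""
    by_label: Dict[str, List[str]] = defaultdict(list)
    for text, label in zip(texts, labels):
        by_label[label].append(text)

    rng = random.Random(seed)  # seeded, so the split is reproducible
    train: List[Pair] = []
    valid: List[Pair] = []
    for label, label_texts in by_label.items():
        shuffled = list(label_texts)
        rng.shuffle(shuffled)
        n_valid = round(len(shuffled) * test_size)
        valid.extend((text, label) for text in shuffled[:n_valid])
        train.extend((text, label) for text in shuffled[n_valid:])

    # Shuffle again so the pairs are not grouped by label
    rng.shuffle(train)
    rng.shuffle(valid)
    return train, valid


# Hypothetical toy data: 10 texts for each of two labels
texts = [f"post {i}" for i in range(20)]
labels = ["datasets"] * 10 + ["datascience"] * 10
train_pairs, valid_pairs = stratified_split(texts, labels, test_size=0.3)
print(Counter(label for _, label in valid_pairs))
```

The resulting lists already have the (text, label) shape that make_docs expects, e.g. make_docs(train_pairs, "train.spacy", cats=cats).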

The recommended training workflow with spaCy uses config files. They let you configure each component of the pipeline, choose which components are trained, etc.

We'll use this configuration file, which relies on spaCy's bag-of-words text classifier. It was generated with spaCy's quickstart tool (https://spacy.io/usage/training#quickstart) and customized to use the TextCatBOW text classification architecture: https://spacy.io/api/architectures#TextCatBOW.

The important parts in the file are the following:

- The pipeline definition (under the `[nlp]` section): the pipeline is composed only of a `textcat` component, since it is the only one we have labelled data for and the only one we are going to train today. Also worth mentioning is the tokenizer, which we have left to the spaCy default but which could be customized: it is the only component our `textcat` component needs in order to be trained.

```ini
[nlp]
lang = "en"
pipeline = ["textcat"]
batch_size = 1000
disabled = []
before_creation = null
after_creation = null
after_pipeline_creation = null
tokenizer = {"@tokenizers":"spacy.Tokenizer.v1"}
```

- The model specification: almost all parameters are set to their defaults, except `exclusive_classes`, which is set to `true` since each post comes from only one subreddit (although that might be questioned). Note that, compared to the config given in the documentation, we had to add the `components.textcat` prefix to the section headers.

```ini
[components.textcat]
factory = "textcat"
scorer = {"@scorers":"spacy.textcat_scorer.v1"}
threshold = 0.5

[components.textcat.model]
@architectures = "spacy.TextCatBOW.v2"
exclusive_classes = true
ngram_size = 1
no_output_layer = false
nO = null
```
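Because the config format is strict (JSON-typed values, dotted section names), it can be handy to parse a fragment before launching a training run. A minimal sketch, assuming thinc (installed as a spaCy dependency) and its Config.from_str; like spaCy's generated configs, the string declares the empty parent `[components]` section. Parsing only checks the syntax and converts values to Python types: registered functions such as `@architectures` are not resolved at this stage.

```python
from thinc.api import Config

# The two fragments above, plus the empty [components] parent section
CONFIG_STR = """
[nlp]
lang = "en"
pipeline = ["textcat"]
batch_size = 1000
disabled = []
before_creation = null
after_creation = null
after_pipeline_creation = null
tokenizer = {"@tokenizers":"spacy.Tokenizer.v1"}

[components]

[components.textcat]
factory = "textcat"
scorer = {"@scorers":"spacy.textcat_scorer.v1"}
threshold = 0.5

[components.textcat.model]
@architectures = "spacy.TextCatBOW.v2"
exclusive_classes = true
ngram_size = 1
no_output_layer = false
nO = null
"""

# Values become Python objects: true -> True, null -> None, lists stay lists
config = Config().from_str(CONFIG_STR)
model_cfg = config["components"]["textcat"]["model"]
print(config["nlp"]["pipeline"], model_cfg["@architectures"], model_cfg["exclusive_classes"])
```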

More details on spaCy Pipelines

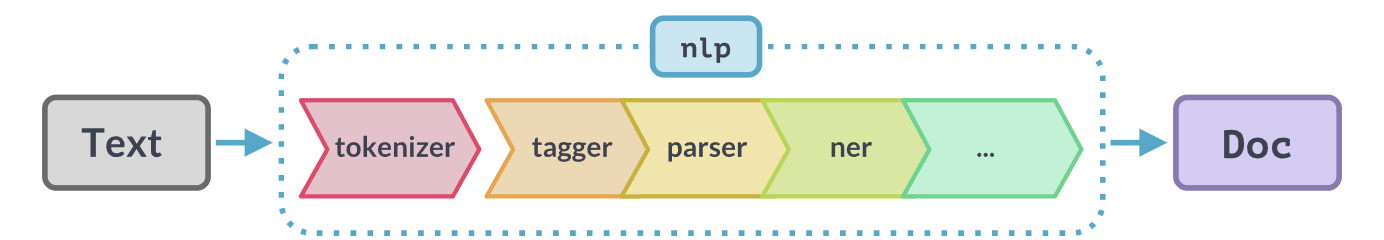

The spaCy pipeline is a modular and highly configurable workflow for processing texts. As shown in the figure above, the text always goes through a mandatory tokenization step first; then a succession of pipeline components (or pipes) is executed in order. These components do not necessarily rely on each other: any dependency between them is defined in a component's code, e.g. by reading attributes that a previous component set on the doc.
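A minimal sketch of such a dependency, using a blank English pipeline so nothing needs downloading: a hypothetical `sentence_counter` component reads the sentence boundaries set by spaCy's rule-based sentencizer, which therefore has to run before it, and stores the count in a hypothetical custom attribute `doc._.n_sents`.

```python
import spacy
from spacy.language import Language
from spacy.tokens import Doc

# Hypothetical custom attribute, registered once on the Doc class
if not Doc.has_extension("n_sents"):
    Doc.set_extension("n_sents", default=None)


@Language.component("sentence_counter")  # hypothetical component name
def sentence_counter(doc: Doc) -> Doc:
    # doc.sents only works if an earlier pipe has set sentence boundaries
    doc._.n_sents = len(list(doc.sents))
    return doc


nlp_demo = spacy.blank("en")
nlp_demo.add_pipe("sentencizer")  # sets the sentence boundaries
nlp_demo.add_pipe("sentence_counter", after="sentencizer")  # reads them

demo_doc = nlp_demo("This is a first sentence. Here is a second one.")
print(nlp_demo.pipe_names, demo_doc._.n_sents)
```

Without the sentencizer, iterating `doc.sents` inside `sentence_counter` would raise a ValueError: the dependency lives entirely in the component's code.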

Unlike most tutorials online and the spaCy docs, where training is started from the CLI, we're going to train the component from a config file and a Python script. This has the advantage of letting us start the training programmatically, e.g. from a data pipeline (using Airflow, Dagster or similar).

However, we'll use spaCy's pre-built training function from spacy.cli.train in order to benefit from all the checks and logging set up in the CLI. (Another approach would be to reuse parts of that code and call the spacy.training module directly.)

```python
from spacy.cli.train import train as spacy_train

config_path = "spacy_textcat/config.cfg"
output_model_path = "output/spacy_textcat"

spacy_train(
    config_path,
    output_path=output_model_path,
    overrides={
        "paths.train": "train.spacy",
        "paths.dev": "valid.spacy",
    },
)
```

We now have a trained classification model!

spaCy stores the model in folders, and by default saves both the best model (model-best) and the last state of the model at the end of training (model-last), in case we want to continue training from that point.

The meta.json file in the model folder contains the scores computed on the validation set: we get a ~80% macro F-score and a nice 0.93 AUC.
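To read these scores programmatically, e.g. to log them from a data pipeline, a small helper can load meta.json. This is a sketch under assumptions: the hypothetical `read_textcat_scores` helper expects the scores under the `"performance"` key and the textcat scorer to name them `cats_macro_f` and `cats_macro_auc`; check your own meta.json, as key names may vary across spaCy versions. Missing keys come back as None rather than raising.

```python
import json
from pathlib import Path
from typing import Dict, Optional


def read_textcat_scores(model_dir: str) -> Dict[str, Optional[float]]:
    """Return the macro F-score and macro AUC stored in a trained pipeline's meta.json."""
    meta = json.loads((Path(model_dir) / "meta.json").read_text(encoding="utf8"))
    # Assumption: evaluation scores are stored under "performance"
    performance = meta.get("performance", {})
    return {
        "macro_f": performance.get("cats_macro_f"),
        "macro_auc": performance.get("cats_macro_auc"),
    }


model_dir = "output/spacy_textcat/model-best"
if Path(model_dir, "meta.json").exists():
    print(read_textcat_scores(model_dir))
```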

We can import the newly trained pipeline from spaCy to predict like this:

```python
import spacy

trained_nlp = spacy.load("output/spacy_textcat/model-best")

# Let's try it on an example text
text = "Hello\n I'm looking for data about birds in New Zealand.\nThe dataset would contain the birds species, colors, estimated population etc."

# Run the trained pipeline on this text
doc = trained_nlp(text)

# Display the predicted categories
doc.cats
```

We see that the model predicts the datasets subreddit with 84% confidence!
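`doc.cats` maps every label to a score; with `exclusive_classes = true` the BOW model ends with a softmax, so the scores sum to (approximately) 1. To get the predicted subreddit itself, take the label with the highest score. A minimal sketch with a hypothetical `top_category` helper and hypothetical scores; on the real doc you would call `top_category(doc.cats)`.

```python
from typing import Dict, Tuple


def top_category(cats: Dict[str, float]) -> Tuple[str, float]:
    """Return the highest-scoring label and its score from a doc.cats-like dict."""
    label = max(cats, key=cats.get)
    return label, cats[label]


# Hypothetical scores, in the same shape as doc.cats
example_cats = {"datasets": 0.7, "datascience": 0.2, "learnmachinelearning": 0.1}
print(top_category(example_cats))
```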

However, the rest of the trained pipeline is empty: since it only contains the textcat component, named entities, dependencies, sentences etc. have not been computed.

```python
print("entities", doc.ents)

try:
    print("sentences", list(doc.sents))
except ValueError as e:
    # doc.sents raises a ValueError when no sentence boundaries have been set
    print("sentences", "error:", e)
```

These are, however, available with high quality in the pretrained pipeline we used earlier, but the classification obviously isn't.

```python
doc_from_pretrained = nlp(text)

print("entities", doc_from_pretrained.ents)
print("sentences", list(doc_from_pretrained.sents))
print("classification", doc_from_pretrained.cats)
```
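Instead of catching the ValueError, you can ask a doc whether sentence boundaries were set with `Doc.has_annotation("SENT_START")`. A minimal sketch using a blank English pipeline, which, like our textcat-only pipeline, sets no sentence boundaries, and then the same pipeline with spaCy's rule-based sentencizer added.

```python
import spacy

sample = "Hello. I'm looking for data about birds in New Zealand."

blank_nlp = spacy.blank("en")  # tokenizer only, no parser or sentencizer
blank_doc = blank_nlp(sample)
# No component set sentence boundaries, so iterating doc.sents would raise
print(blank_doc.has_annotation("SENT_START"))

blank_nlp.add_pipe("sentencizer")  # rule-based sentence boundary detection
split_doc = blank_nlp(sample)
if split_doc.has_annotation("SENT_START"):
    print([sent.text for sent in split_doc.sents])
```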

The question, then, is: how do we combine both pipelines without writing a lot of glue code?

Integrate the new component into an existing pipeline

There are actually several ways to do this:

- Creating a pipe and loading the model from files, but this would require a different training process from the one we used (at the model level rather than the pipeline level)

```python
pipe = nlp.add_pipe("textcat")
pipe.from_disk("path/to/model/files")  # Note: requires a different folder structure than what we've generated
```

- Loading the trained pipeline, saving its textcat component to disk/bytes, and loading it back from disk/bytes into a new pipe in the pretrained pipeline

```python
trained_nlp.get_pipe("textcat").to_disk("tmp")
nlp.add_pipe("textcat").from_disk("tmp")

# OR

nlp.add_pipe("textcat").from_bytes(
    trained_nlp.get_pipe("textcat").to_bytes()
)
```

- Creating the pipe from a source pipeline:

```python
nlp_merged = spacy.load("en_core_web_md")
nlp_merged.add_pipe("textcat", source=trained_nlp)

doc_from_merged = nlp_merged(text)

print("entities", doc_from_merged.ents)
print("sentences", list(doc_from_merged.sents))
print("classification", doc_from_merged.cats)
```
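The `source` mechanism is not specific to textcat. A self-contained sketch with two blank English pipelines (no download, no training) that takes a rule-based sentencizer from one pipeline into the other; the `name` argument, used here with the hypothetical name `copied_sentencizer`, lets the sourced component be renamed in the target pipeline. Two blank English pipelines share the same (empty) vectors, which is what sourcing works best with.

```python
import spacy

# Source pipeline: blank English pipeline with a rule-based sentencizer
source_nlp = spacy.blank("en")
source_nlp.add_pipe("sentencizer")

# Target pipeline: add the component from the source, under a new name
target_nlp = spacy.blank("en")
target_nlp.add_pipe("sentencizer", source=source_nlp, name="copied_sentencizer")

copied_doc = target_nlp("First sentence. Second sentence.")
print(target_nlp.pipe_names, len(list(copied_doc.sents)))
```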

Removing the warning

To remove the vector misalignment warning, you'd have to train the pipeline by passing the pretrained tok2vec component from the pretrained model. In our case it's not a problem, since the TextCatBOW architecture relies on sparse n-gram features rather than on word vectors; nonetheless, I'll try to update the post with the solution to this warning when I can.

From this point we can store and reuse the pipeline at will!
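Storing a pipeline is a matter of calling `to_disk` on it, and `spacy.load` accepts the resulting directory path. A self-contained sketch with a blank English pipeline and a temporary directory; in the notebook, you would call `nlp_merged.to_disk(...)` with a directory of your choice instead.

```python
import tempfile
from pathlib import Path

import spacy

pipeline = spacy.blank("en")
pipeline.add_pipe("sentencizer")

with tempfile.TemporaryDirectory() as tmp_dir:
    pipeline_dir = Path(tmp_dir) / "merged_pipeline"
    pipeline.to_disk(pipeline_dir)  # writes config.cfg, meta.json and the components' data
    reloaded = spacy.load(pipeline_dir)  # spacy.load also accepts a directory path

print(reloaded.pipe_names)
```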